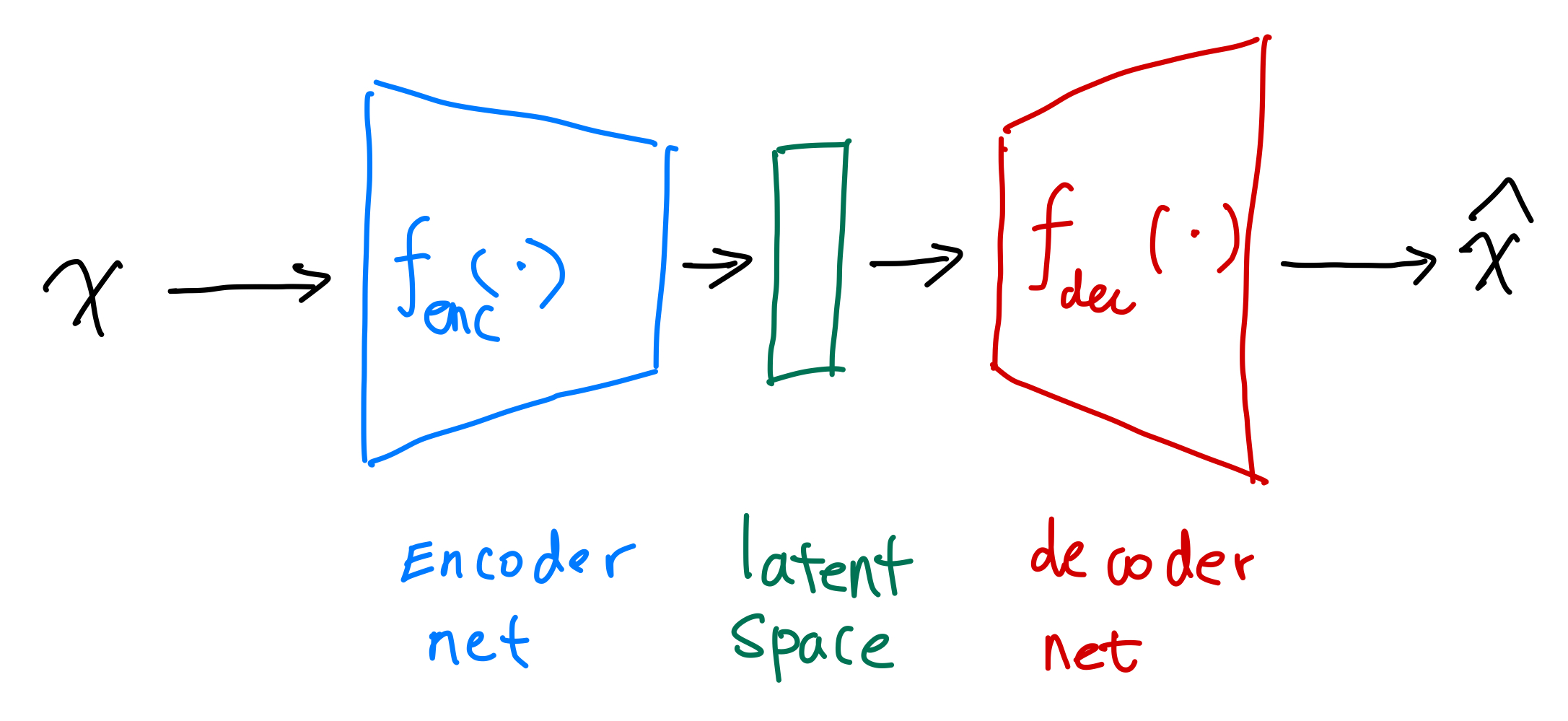

Generative Model: Auto-Encoder

Autoencoders (AE) are models that encode inputs into a compact latent space and decode them back to reconstruct the input.

The simplest auto-encoder is rather easy to understand.

The loss can be chosen to suit the task, e.g., cross entropy for binary labels.

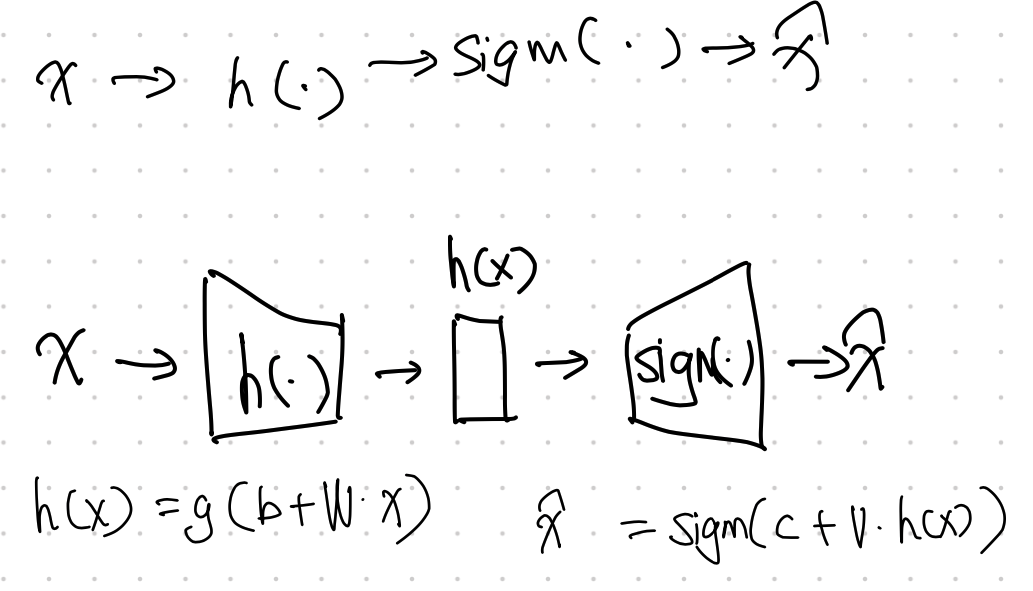

A simple autoencoder can be built from two neural nets, e.g.,

$$ \begin{align} {\color{green}h} &= {\color{blue}g}{\color{blue}(}{\color{blue}b} + {\color{blue}w} x{\color{blue})} \\ \hat x &= {\color{red}\sigma}{\color{red}(c} + {\color{red}v} {\color{green}h}{\color{red})}, \end{align} $$

where in this simple example,

- ${\color{blue}g(b + w \cdot )}$ is the encoder, and

- ${\color{red}\sigma(c + v \cdot )}$ is the decoder.
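The two maps above can be sketched as a single forward pass in NumPy. This is only a minimal illustration under assumptions not stated in the text: $g$ is taken to be `tanh`, $\sigma$ the logistic sigmoid, and the layer sizes (`n_input`, `n_latent`) and the random initialisation are hypothetical.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid, used here as the decoder activation sigma."""
    return 1.0 / (1.0 + np.exp(-z))

def encode(x, w, b, g=np.tanh):
    """Encoder: h = g(b + w x). The choice g = tanh is an assumption."""
    return g(b + w @ x)

def decode(h, v, c):
    """Decoder: x_hat = sigma(c + v h)."""
    return sigmoid(c + v @ h)

rng = np.random.default_rng(0)
n_input, n_latent = 8, 3  # hypothetical sizes; the latent space is smaller

# Randomly initialised (untrained) parameters, for illustration only
w = rng.normal(scale=0.1, size=(n_latent, n_input))
b = np.zeros(n_latent)
v = rng.normal(scale=0.1, size=(n_input, n_latent))
c = np.zeros(n_input)

x = rng.integers(0, 2, size=n_input).astype(float)  # a binary input vector
h = encode(x, w, b)      # compact latent code
x_hat = decode(h, v, c)  # reconstruction, each entry in (0, 1)
```

Because the parameters are untrained, `x_hat` will not resemble `x` yet; training would adjust `w`, `b`, `v`, and `c` to reduce the reconstruction loss.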

For binary labels, we can use a simple cross entropy as the loss.
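As a rough sketch, one common form of this loss is the binary cross entropy summed over the components of the input, $L = -\sum_i \left[ x_i \log \hat x_i + (1 - x_i) \log (1 - \hat x_i) \right]$. The function name `binary_cross_entropy`, the clipping constant `eps`, and the example vectors below are assumptions made for illustration.

```python
import numpy as np

def binary_cross_entropy(x, x_hat, eps=1e-12):
    """Summed binary cross entropy between a binary target x and a reconstruction x_hat."""
    # Clip to avoid log(0); eps is an arbitrary small constant
    x_hat = np.clip(x_hat, eps, 1.0 - eps)
    return -np.sum(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat))

x = np.array([1.0, 0.0, 1.0, 1.0])                   # binary input
good_reconstruction = np.array([0.9, 0.1, 0.8, 0.95])
bad_reconstruction = np.array([0.2, 0.7, 0.4, 0.1])

loss_good = binary_cross_entropy(x, good_reconstruction)
loss_bad = binary_cross_entropy(x, bad_reconstruction)
```

A reconstruction closer to the binary input gives a smaller loss, so here `loss_good` is smaller than `loss_bad`.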

Code

See Lippe [1].

L Ma (2021). 'Generative Model: Auto-Encoder', Datumorphism, 08 April. Available at: https://datumorphism.leima.is/wiki/machine-learning/generative-models/autoencoder/.