Layer Norm

Layer norm is a normalization method that enables better training (Xu et al. 2019).

Layer normalization (LayerNorm) is a technique to normalize the distributions of intermediate layers. It enables smoother gradients, faster training, and better generalization accuracy.

Quote from Xu et al. 2019

The key idea of layer norm is to normalize the input to a layer using the mean and standard deviation computed over the features of each individual sample.

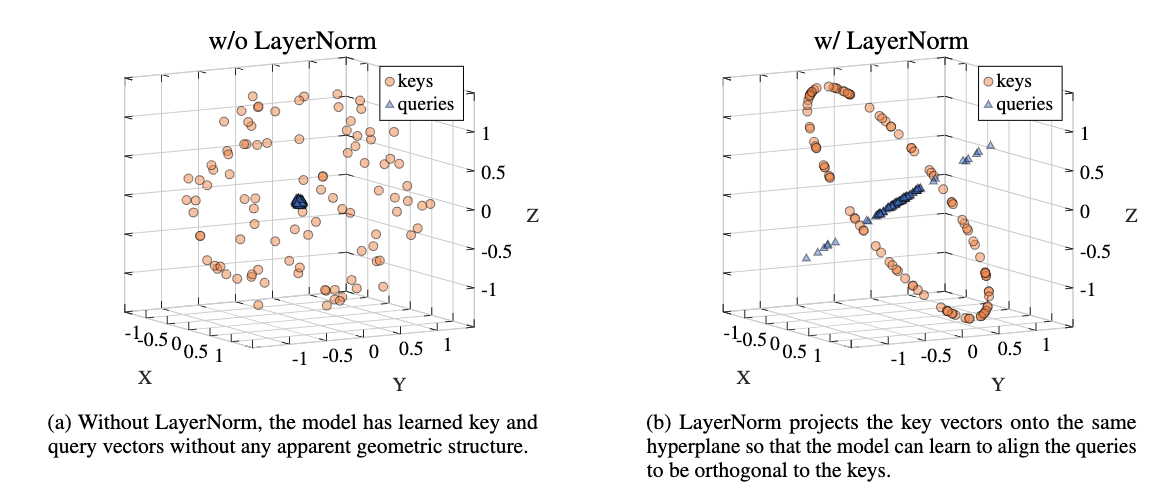
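The normalization described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `layer_norm`, the optional `gain` and `bias` arguments, and the small constant `eps` (added for numerical stability) are assumptions made for this example.

```python
import numpy as np


def layer_norm(x, gain=None, bias=None, eps=1e-5):
    """Normalize each sample over its last (feature) axis.

    `gain`, `bias`, and `eps` are illustrative parameters for this sketch.
    """
    x = np.asarray(x, dtype=float)
    # Statistics are computed per sample, over the feature axis.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)  # population variance (ddof=0)
    y = (x - mean) / np.sqrt(var + eps)
    # Optional learnable rescaling and shift.
    if gain is not None:
        y = y * gain
    if bias is not None:
        y = y + bias
    return y


# Two samples with four features each.
x = np.array([[1.0, 2.0, 3.0, 4.0],
              [10.0, 0.0, -10.0, 0.0]])
y = layer_norm(x)
```

Without `gain` and `bias`, each row of `y` has approximately zero mean and unit standard deviation, regardless of the scale of the corresponding row of `x`.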

Layer norm plays two roles in neural networks:

- Projects the key vectors onto a hyperplane.

- Scales the key vectors to have the same length.

Brody et al. 2023
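The two roles listed above can be checked numerically. The sketch below assumes plain layer norm without a learnable gain or bias and without a stabilizing epsilon; the random `keys` array and the dimension `d` are made up for illustration. Under these assumptions, subtracting the mean removes the component along the all-ones vector, so every vector lands on the hyperplane orthogonal to it, and dividing by the standard deviation gives every vector the same length, the square root of `d`.

```python
import numpy as np

d = 8  # feature dimension (illustrative)
rng = np.random.default_rng(0)
keys = rng.normal(size=(5, d))  # five hypothetical key vectors

ones = np.ones(d)

# Role 1: subtracting the mean projects each vector onto the
# hyperplane orthogonal to the all-ones vector.
projected = keys - keys.mean(axis=-1, keepdims=True)

# Role 2: dividing by the standard deviation rescales each
# projected vector to the same length, sqrt(d).
normalized = projected / projected.std(axis=-1, keepdims=True)

dots = normalized @ ones                         # all approximately 0
lengths = np.linalg.norm(normalized, axis=-1)    # all approximately sqrt(d)
```

A learnable gain and bias, if present, would rescale and shift these vectors afterwards, so the two properties hold exactly only for the unparameterized normalization shown here.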

By L Ma

References:

- Ba JL, Kiros JR, Hinton GE. Layer Normalization. arXiv [stat.ML]. 2016. Available: http://arxiv.org/abs/1607.06450

- Brody S, Alon U, Yahav E. On the expressivity role of LayerNorm in Transformers’ attention. arXiv [cs.LG]. 2023. Available: http://arxiv.org/abs/2305.02582

- Xu J, Sun X, Zhang Z, Zhao G, Lin J. Understanding and Improving Layer Normalization. Advances in Neural Information Processing Systems. 2019;32. Available: https://proceedings.neurips.cc/paper_files/paper/2019/file/2f4fe03d77724a7217006e5d16728874-Paper.pdf

L Ma (2021). 'Layer Norm', Datumorphism, 02 April. Available at: https://datumorphism.leima.is/wiki/machine-learning/neural-networks/layer-norm/.