f-GAN

The essence of [[GAN]] is to compare the generated distribution $p_G$ and the data distribution $p_\text{data}$. The vanilla GAN considers the Jensen-Shannon divergence $\operatorname{D}_\text{JS}(p_\text{data}\Vert p_{G})$. The discriminator ${\color{green}D}$ serves the purpose of forcing this divergence to be small.

There exists a generalization of the JS divergence, which is called [[f-divergence]][^f-divergence_wiki], $$ \operatorname{D}_f = \int f\left(\frac{p}{q}\right) q\,\mathrm d\mu, $$ where $p$ and $q$ are two densities, $\mu$ is a reference measure, and the generating function $f$ is required to be convex with $f(1) = 0$. For $f(x) = x \log x$ with $x = p/q$, the f-divergence reduces to the KL divergence. f-GAN obtains the model by estimating the f-divergence between the data distribution and the generated distribution[^Nowozin2016].
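To make the definition concrete, here is a minimal sketch for discrete distributions, where the integral becomes $\sum_i q_i f(p_i/q_i)$. The function names (`f_divergence`, `f_kl`, `f_js`) are hypothetical and not part of the original article. The JS generating function is scaled by $1/2$ here so that the result equals the usual JS divergence; other conventions differ by a constant factor.

```python
import math

def f_divergence(p, q, f):
    """Discrete f-divergence sum_i q_i * f(p_i / q_i); assumes every q_i > 0."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))

def f_kl(u):
    """Generating function of the KL divergence, f(u) = u log u (0 at u = 0)."""
    return u * math.log(u) if u > 0 else 0.0

def f_js(u):
    """Generating function of the JS divergence (assumed 1/2 scaling)."""
    return 0.5 * (f_kl(u) - (u + 1.0) * math.log((u + 1.0) / 2.0))

p = [0.5, 0.5]
q = [0.9, 0.1]
kl_pq = f_divergence(p, q, f_kl)  # KL(p || q)
js_pq = f_divergence(p, q, f_js)  # JS(p, q)
```

Both generating functions are convex and vanish at $u = 1$, so both divergences are zero when `p` equals `q`.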

Variational Divergence Minimization

Variational Divergence Minimization (VDM) extends the variational estimation of f-divergence[^Nowozin2016]. VDM searches for the saddle point of an objective $F({\color{red}\theta}, {\color{blue}\omega})$, i.e., a minimum w.r.t. ${\color{red}\theta}$ and a maximum w.r.t. ${\color{blue}\omega}$, where ${\color{red}\theta}$ is the parameter set of the generator ${\color{red}Q_\theta}$, and ${\color{blue}\omega}$ is the parameter set of the variational function ${\color{blue}T_\omega}$ that is used to estimate the f-divergence.

The objective $F({\color{red}\theta}, {\color{blue}\omega})$ is related to the choice of $f$ in f-divergence and the variational functional ${\color{blue}T}$,

$$ \begin{align} & F(\theta, \omega)\\ =& \mathbb E_{x\sim p_\text{data}} \left[ {\color{blue}T_\omega}(x) \right] - \mathbb E_{x\sim {\color{red}Q_\theta} } \left[ f^*({\color{blue}T_\omega}(x)) \right] \\ =& \mathbb E_{x\sim p_\text{data}} \left[ g_f(V_{\color{blue}\omega}(x)) \right] - \mathbb E_{x\sim {\color{red}Q_\theta} } \left[ f^*(g_f(V_{\color{blue}\omega}(x))) \right]. \end{align} $$

In the above objective,

- $f^*$ is the Legendre–Fenchel transformation (convex conjugate) of $f$, i.e., $f^*(t) = \sup_{u\in \operatorname{dom}_f}\left\{ ut - f(u) \right\}$.
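As a worked example of the transformation (an illustration added here, not taken from the original article), take the KL generating function $f(u) = u \log u$ on $u > 0$. The expression inside the supremum is concave in $u$, so the stationary point gives the maximum:

$$ \begin{align} f^*(t) =& \sup_{u > 0}\left\{ ut - u\log u \right\}, \\ 0 =& \frac{\partial}{\partial u}\left( ut - u\log u \right) = t - \log u - 1 \quad\Rightarrow\quad u = e^{t-1}, \\ f^*(t) =& t e^{t-1} - (t - 1) e^{t-1} = e^{t-1}. \end{align} $$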

$T$, $g_f$, $V$

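The function $T$ is the variational function: for any $T$ the objective is a lower bound of the f-divergence, and maximizing over $T$ tightens the bound[^Nowozin2016]. It is parameterized as ${\color{blue}T_\omega}(x) = g_f(V_{\color{blue}\omega}(x))$, where $V_{\color{blue}\omega}$ is the unconstrained output of a network and $g_f$ is an output activation that maps it into the domain of $f^*$.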

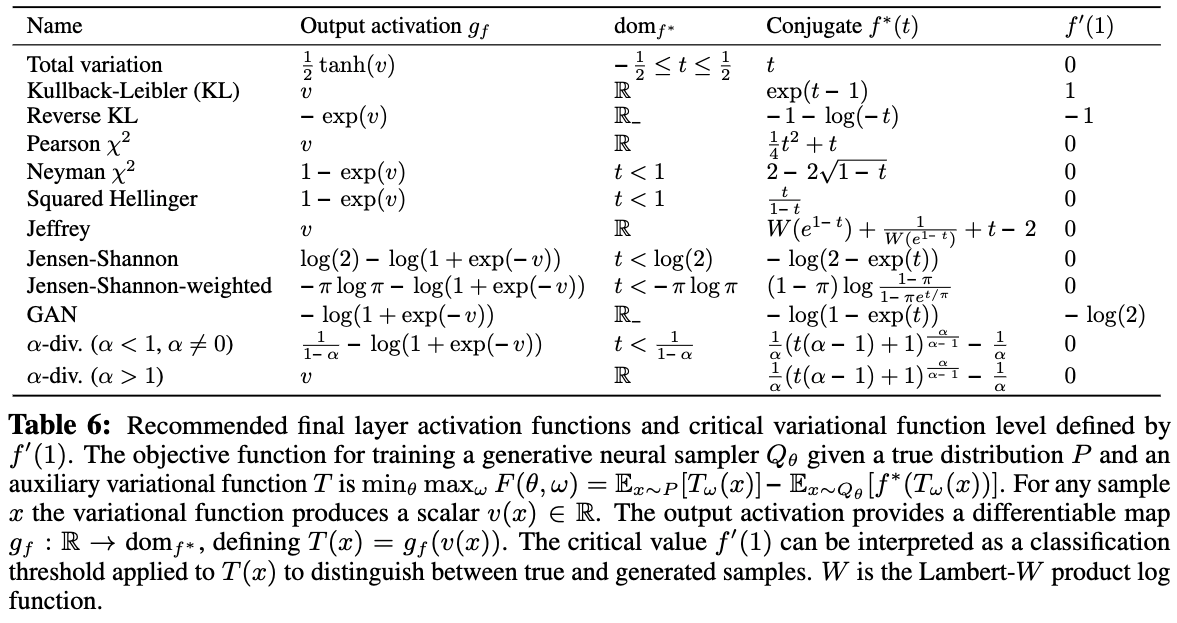

Nowozin et al. provided a table for $g_f$ and $V$[^Nowozin2016].

We estimate

- $\mathbb E_{x\sim p_\text{data}}$ by sampling a mini-batch from the training data, and

- $\mathbb E_{x\sim {\color{red}Q_\theta} }$ by sampling from the generator.
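The two sample averages above can be sketched as follows. This is a minimal illustration, assuming the GAN choice $g_f(v) = -\log(1 + e^{-v})$ and $f^*(t) = -\log(1 - e^{t})$; the function names and the lists `v_real` and `v_fake` (values of $V_\omega$ on data samples and on generated samples) are hypothetical.

```python
import math

def g_gan(v):
    """Assumed output activation for the GAN divergence: log(sigmoid(v))."""
    return -math.log1p(math.exp(-v))

def f_star_gan(t):
    """Assumed conjugate for the GAN divergence, -log(1 - e^t), for t < 0."""
    # -expm1(t) equals 1 - e^t and stays accurate when t is close to 0.
    return -math.log(-math.expm1(t))

def vdm_objective(v_real, v_fake, g_f, f_star):
    """Mini-batch estimate of F = E_data[g_f(V)] - E_Q[f*(g_f(V))]."""
    real_term = sum(g_f(v) for v in v_real) / len(v_real)
    fake_term = sum(f_star(g_f(v)) for v in v_fake) / len(v_fake)
    return real_term - fake_term

# Hypothetical network outputs on a data mini-batch and a generated batch.
v_real = [1.2, 0.3, 2.0]
v_fake = [-0.7, 0.1, -1.5]
F = vdm_objective(v_real, v_fake, g_gan, f_star_gan)
```

In training, the parameters behind `v_real` and `v_fake` would be updated to increase `F`, while the generator producing the fake samples would be updated to decrease it.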

Reduce to GAN

The VDM loss can be reduced to the [[loss of GAN]] by setting[^Nowozin2016]

$$ \begin{align} \log {\color{green}D_\omega} =& g_f(V_{\color{blue}\omega}(x)) \\ \log \left( 1 - {\color{green}D_\omega} \right) =& -f^*\left( g_f(V_{\color{blue}\omega}(x)) \right). \end{align} $$

It is straightforward to verify that the following choice solves the above set of equations,

$$ g_f(V) = \log \frac{1}{1 + e^{-V}}. $$
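The check can be written out explicitly. The steps below are a sketch that assumes the conjugate for the GAN divergence is $f^*(t) = -\log\left(1 - e^{t}\right)$ and that the discriminator is the sigmoid of the network output, ${\color{green}D_\omega} = 1/(1 + e^{-V})$; neither is stated explicitly in the text above.

$$ \begin{align} \log {\color{green}D_\omega} =& \log \frac{1}{1 + e^{-V}} = g_f(V), \\ -f^*\left( g_f(V) \right) =& \log\left( 1 - e^{g_f(V)} \right) = \log\left( 1 - \frac{1}{1 + e^{-V}} \right) = \log \left( 1 - {\color{green}D_\omega} \right). \end{align} $$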

[^f-divergence_wiki]: Contributors to Wikimedia projects. F-divergence. In: Wikipedia [Internet]. 17 Jul 2021 [cited 6 Sep 2021]. Available: https://en.wikipedia.org/wiki/F-divergence#Instances_of_f-divergences

[^Nowozin2016]: Nowozin S, Cseke B, Tomioka R. f-GAN: Training Generative Neural Samplers using Variational Divergence Minimization. arXiv [stat.ML]. 2016. Available: http://arxiv.org/abs/1606.00709

- Liu2020 Liu X, Zhang F, Hou Z, Wang Z, Mian L, Zhang J, et al. Self-supervised Learning: Generative or Contrastive. arXiv [cs.LG]. 2020. Available: http://arxiv.org/abs/2006.08218

- Nowozin2016 Nowozin S, Cseke B, Tomioka R. f-GAN: Training Generative Neural Samplers using Variational Divergence Minimization. arXiv [stat.ML]. 2016. Available: http://arxiv.org/abs/1606.00709

- f-divergence_wiki Contributors to Wikimedia projects. F-divergence. In: Wikipedia [Internet]. 17 Jul 2021 [cited 6 Sep 2021]. Available: https://en.wikipedia.org/wiki/F-divergence#Instances_of_f-divergences

- convex_conjugate_wiki Contributors to Wikimedia projects. Convex conjugate. In: Wikipedia [Internet]. 20 Feb 2021 [cited 7 Sep 2021]. Available: https://en.wikipedia.org/wiki/Convex_conjugate

L Ma (2021). 'f-GAN', Datumorphism, 08 April. Available at: https://datumorphism.leima.is/wiki/machine-learning/adversarial-models/f-gan/.