Feasibility of Learning

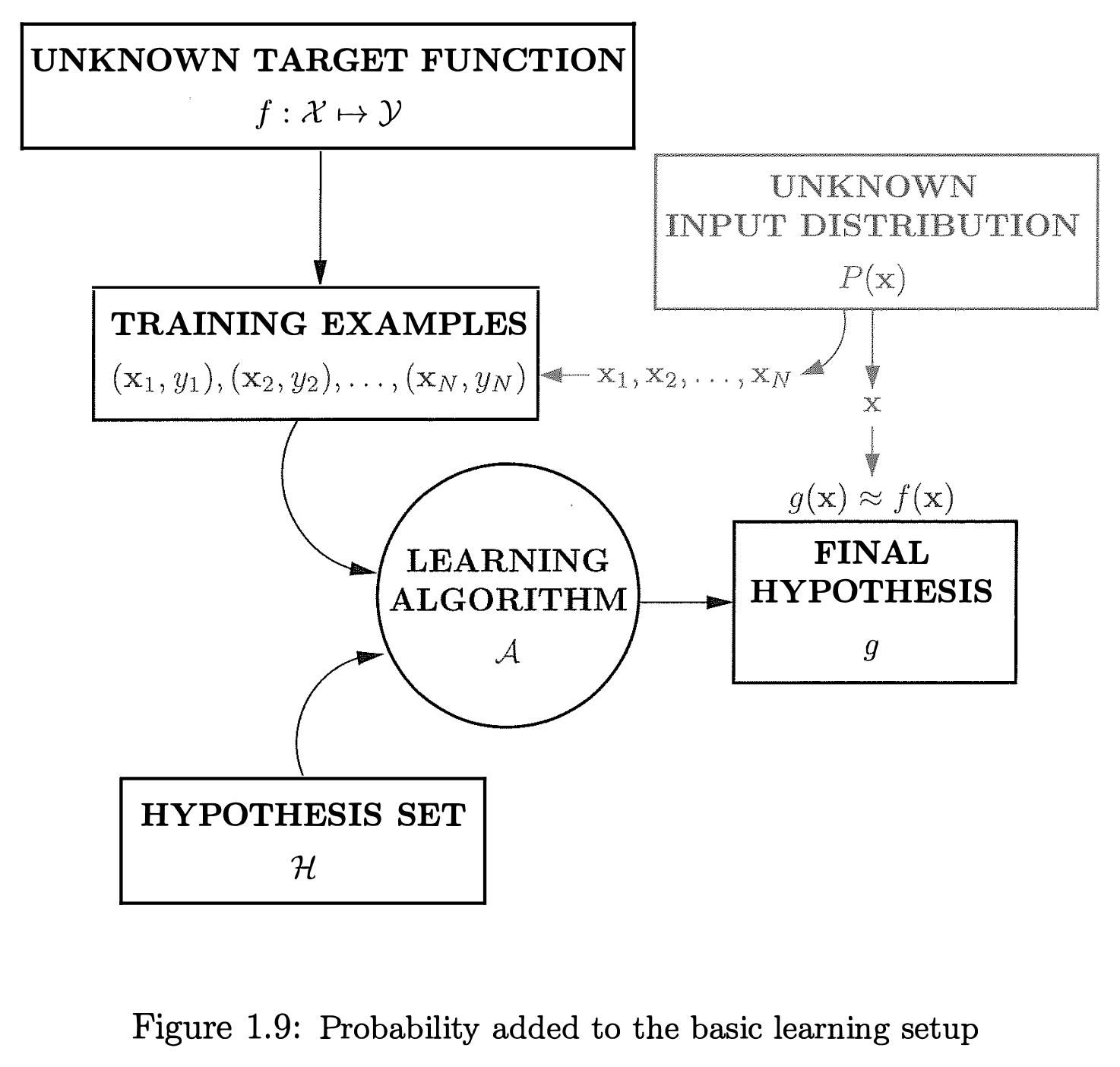

Why is learning from data even possible? To discuss this question, we need a framework for learning. Operationally, we can think of learning in terms of the framework described in Abu-Mostafa2012.

Naive View

Naively speaking, a model should have two key properties:

- enough capacity to hold the necessary information embedded in the data, and

- a method to find the combination of parameters so that the model can generate/complete new data.

Most neural networks have enough capacity to hold the necessary information in the data (Zhang2016). The puzzle is that this capacity is very large, large enough that one would expect overfitting. So why does backprop work at all? How does it find a set of parameters that generalizes?
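To make the capacity point concrete, here is a minimal sketch, assuming NumPy is available. It is not the experiment from Zhang2016, which trains deep networks by gradient descent. Instead it uses a stand-in: a fixed random hidden layer with more units than training samples, whose output weights are fitted by least squares. The function name `fit_random_labels` and all its parameters are hypothetical, chosen only for illustration. The labels are drawn independently of the inputs, so there is nothing to learn, yet the model can still fit the training set.

```python
import numpy as np


def fit_random_labels(n_samples=50, n_inputs=5, n_features=200, seed=0):
    """Fit labels that are pure noise with an overparameterized model.

    Hypothetical illustration: a fixed random tanh hidden layer with
    n_features > n_samples units, output weights solved by least squares.
    """
    rng = np.random.default_rng(seed)

    # Training data: the labels are independent of the inputs.
    X = rng.normal(size=(n_samples, n_inputs))
    y = rng.choice([-1.0, 1.0], size=n_samples)

    # Fixed random hidden layer (these weights are never trained).
    W = rng.normal(size=(n_inputs, n_features))
    b = rng.normal(size=n_features)
    H = np.tanh(X @ W + b)

    # Minimum-norm least-squares solution for the output weights.
    w, *_ = np.linalg.lstsq(H, y, rcond=None)
    train_acc = float(np.mean(np.sign(H @ w) == y))

    # Fresh inputs with fresh random labels: no model can beat chance here.
    X_new = rng.normal(size=(n_samples, n_inputs))
    y_new = rng.choice([-1.0, 1.0], size=n_samples)
    H_new = np.tanh(X_new @ W + b)
    test_acc = float(np.mean(np.sign(H_new @ w) == y_new))

    return train_acc, test_acc


if __name__ == "__main__":
    train_acc, test_acc = fit_random_labels()
    print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

With more hidden units than samples, the model has enough capacity to memorize noise, so a perfect training fit alone says nothing about generalization. That is why the second property, how the parameters are found, matters.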


- Abu-Mostafa2012 Abu-Mostafa YS, Magdon-Ismail M, Lin H-T. Learning from Data. AMLBook; 2012. Available: https://www.semanticscholar.org/paper/Learning-From-Data-Abu-Mostafa-Magdon-Ismail/1c0ed9ed3201ef381cc392fc3ca91cae6ecfc698

- Bernstein2021 Bernstein J. Machine learning is just statistics + quantifier reversal. In: jeremybernste [Internet]. [cited 1 Nov 2021]. Available: https://jeremybernste.in/writing/ml-is-just-statistics

- Guedj2019 Guedj B. A Primer on PAC-Bayesian Learning. arXiv [stat.ML]. 2019. Available: http://arxiv.org/abs/1901.05353

- Zhang2016 Zhang C, Bengio S, Hardt M, Recht B, Vinyals O. Understanding deep learning requires rethinking generalization. arXiv [cs.LG]. 2016. Available: http://arxiv.org/abs/1611.03530

L Ma (2021). 'Feasibility of Learning', Datumorphism, 10 April. Available at: https://datumorphism.leima.is/wiki/learning-theory/feasibility-of-learning/.