Rosenblatt's Perceptron

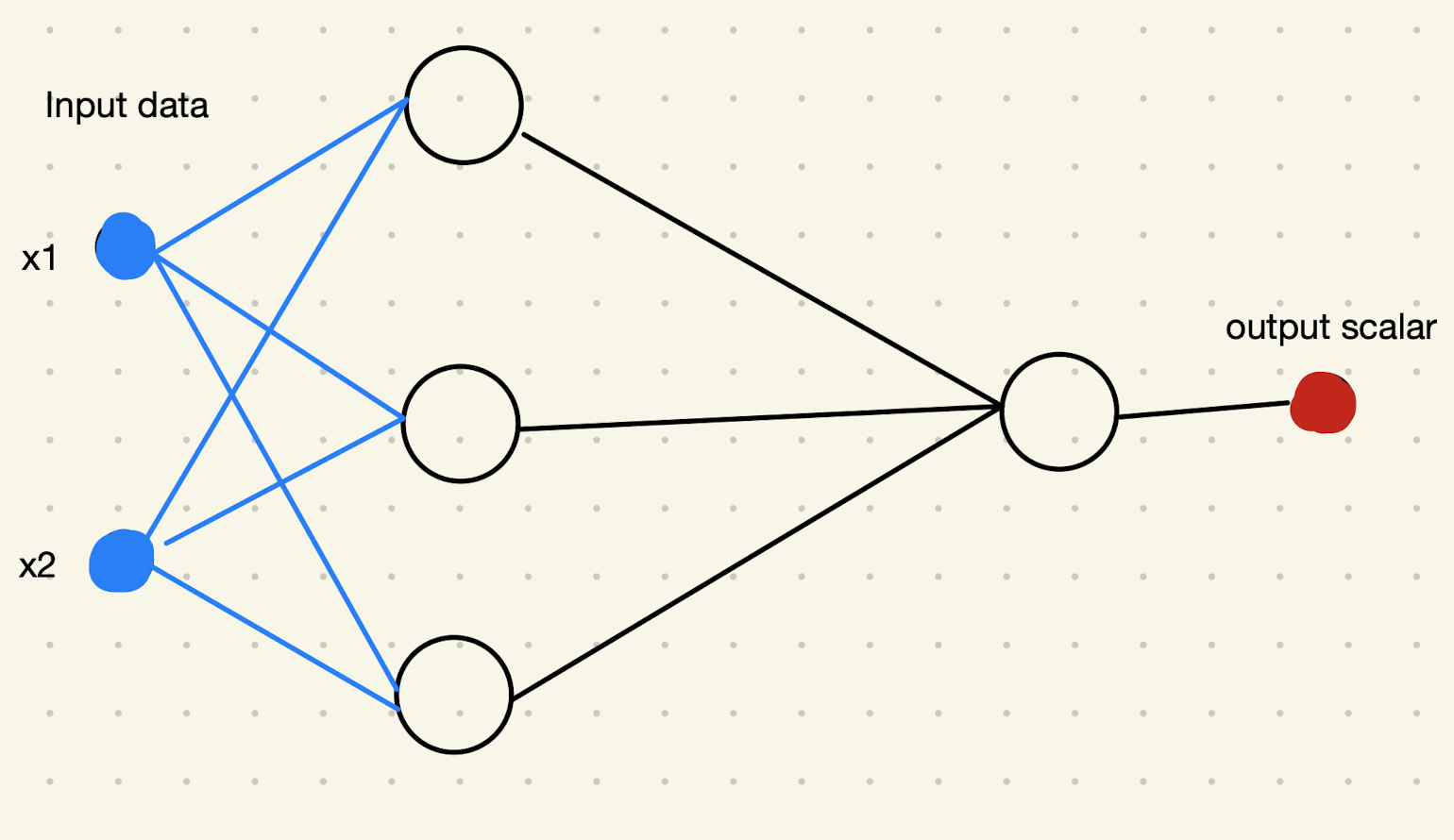

Rosenblatt's perceptron connects McCulloch-Pitts neurons in layers.

Rosenblatt proposed that we fix all the weights except those of the last neuron, which are left free to be learned.

All layers except the last are used as a fixed transformation of the input data $\lbrace x_1, \cdots, x_i, \cdots, x_N \rbrace$ into a new space $\lbrace z_1, \cdots, z_i, \cdots, z_{N'} \rbrace$. The classification is done in the $\lbrace z_1, \cdots, z_i, \cdots, z_{N'} \rbrace$ space by tuning the weights of the last neuron.
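
The fixed transformation can be sketched as follows. This is only an illustration under stated assumptions: the helper names (`threshold_unit`, `fixed_feature_map`), the single hidden layer, the random Gaussian weights, and the $\pm 1$ outputs are all hypothetical choices, not details given in this card. The only point it tries to show is that the hidden weights are set once and never updated.

```python
import random

def threshold_unit(weights, bias, x):
    """A McCulloch-Pitts style unit: output +1 if the weighted sum exceeds 0, else -1."""
    s = sum(w_i * x_i for w_i, x_i in zip(weights, x)) + bias
    return 1.0 if s > 0 else -1.0

def fixed_feature_map(x, hidden_weights, hidden_biases):
    """Map an input vector x to z using hidden units whose weights stay frozen."""
    return [threshold_unit(w, b, x) for w, b in zip(hidden_weights, hidden_biases)]

# Assumption: the frozen weights are drawn at random once and never trained.
rng = random.Random(0)
n_inputs, n_hidden = 2, 5
hidden_weights = [[rng.gauss(0, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
hidden_biases = [rng.gauss(0, 1) for _ in range(n_hidden)]

x = [0.5, -1.2]                                   # one input point
z = fixed_feature_map(x, hidden_weights, hidden_biases)  # its image in the new space
```

Only a final neuron acting on `z` would be trained; `hidden_weights` and `hidden_biases` are never touched again.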

Initially, we set $w_0=0$. With labels $y_k \in \lbrace -1, +1 \rbrace$, at step $k$,

- if the sign of the perceptron's prediction $w_k \cdot z_{k+1}$ agrees with the label $y_{k+1}$, i.e., $y_{k+1} \cdot ( w_k \cdot z_{k+1} ) >0$, we keep the last neuron unchanged,

- if the sign is different, i.e., $y_{k+1} \cdot ( w_k \cdot z_{k+1} ) \le 0$ (this includes the zero margin produced by the initial zero weights), we update the weights to $w_{k+1} = w_k + y_{k+1}z_{k+1}$, because $y_{k+1} \cdot ( w_{k+1} \cdot z_{k+1} ) = y_{k+1} \cdot ( w_k \cdot z_{k+1} ) + y_{k+1}^2 \lVert z_{k+1} \rVert^2$. Since $y_{k+1}^2 \lVert z_{k+1} \rVert^2 > 0$ for any nonzero $z_{k+1}$, this guarantees $y_{k+1} \cdot ( w_{k+1} \cdot z_{k+1} )>y_{k+1} \cdot ( w_k \cdot z_{k+1} )$, which moves the prediction for this point toward the actual class.
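
The two cases above can be sketched as a short training loop for the last neuron. Several things here are assumptions rather than part of the card: the function names `dot` and `train_last_neuron`, the repeated passes over the data (`max_epochs`), the toy points `zs`, the constant first component used as a bias feature, and the choice to treat a zero margin as a mistake so that the all-zero starting weights get updated.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(a_i * b_i for a_i, b_i in zip(a, b))

def train_last_neuron(zs, ys, max_epochs=100):
    """Perceptron updates on transformed points zs with labels ys in {-1, +1}."""
    w = [0.0] * len(zs[0])            # start from w = 0
    for _ in range(max_epochs):       # assumption: sweep the data repeatedly
        mistakes = 0
        for z, y in zip(zs, ys):
            if y * dot(w, z) <= 0:    # sign disagrees (or zero margin)
                # w_{k+1} = w_k + y_{k+1} z_{k+1}
                w = [w_i + y * z_i for w_i, z_i in zip(w, z)]
                mistakes += 1
            # otherwise the last neuron is left unchanged
        if mistakes == 0:             # every point is classified correctly
            break
    return w

# Hypothetical, linearly separable toy data in the z space.
zs = [[1.0, 2.0, 1.0], [1.0, 1.5, 2.0], [1.0, -1.0, -1.5], [1.0, -2.0, -0.5]]
ys = [1, 1, -1, -1]
w = train_last_neuron(zs, ys)
```

On data that is not linearly separable in the $z$ space this loop simply stops after `max_epochs` passes without reaching zero mistakes.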

By L Ma.

LM (2021). 'Rosenblatt's Perceptron', Datumorphism, 02 April. Available at: https://datumorphism.leima.is/cards/machine-learning/neural-networks/rosenblatt-perceptron/.